The Evolution Of Voice AI: Why AI Voice Feels More Human Than Ever

Voice AI has evolved from robotic monotones to voices so natural they’re often indistinguishable from humans. Powered by neural networks, vast training data, and a deep understanding of emotion and context, modern AI voices feel...real. From Google Duplex’s human-like pauses to Microsoft’s VALL-E cloning voices in seconds, the technology has become a bridge for authentic connection. This blog explores the journey, breakthroughs, and future of Voice AI, and why it’s redefining how we communicate.

There was a time when you could spot a robotic voice from three words away: tinny, monotone, and lacking any semblance of personality. But somewhere between the mechanical speech boxes of the mid-20th century and the emotionally expressive voice assistants of today, a transformation occurred. Synthetic speech did not just become clearer; it became convincingly human. The evolution of voice AI was underway.

In 2018, Google’s AI assistant made a phone call to book a hair appointment, complete with pauses, filler sounds, and the kind of natural tone that left the person on the other end apparently unaware they were speaking to a machine. In 2022, an AI-built model of Val Kilmer’s voice was reported to have helped him speak on screen in Top Gun: Maverick after illness had taken his natural voice. And in 2023, Microsoft’s VALL-E demonstrated voice cloning with as little as three seconds of audio, raising both eyebrows and expectations.

What’s behind this sudden leap in realism? It's the rise of neural networks, the explosion of training data, and a shift in how we understand human speech, not as a set of sounds, but as an assortment of emotion, rhythm, and context. Voice AI today mirrors the very nuance of how we speak, think, and connect.

In this article, we trace the evolution of voice AI: how it began, the key breakthroughs that made it human-like, and why today’s AI-generated voices are not only often indistinguishable from human ones but, in many cases, preferred. Along the way, we’ll explore the ethics, opportunities, and future of a technology that’s quietly changing how the world communicates.

Timeline of Voice AI

For decades, synthetic speech lingered in the uncanny valley… recognisably robotic, occasionally impressive, but never truly human. That began to change when researchers stopped trying to manually engineer the rules of speech and instead taught machines to learn from us. What follows is a realistic, research-backed timeline capturing the turning points that brought us from stilted syllables to near-perfect mimicry.

1939 - 1960s: The Birth of Synthetic Speech

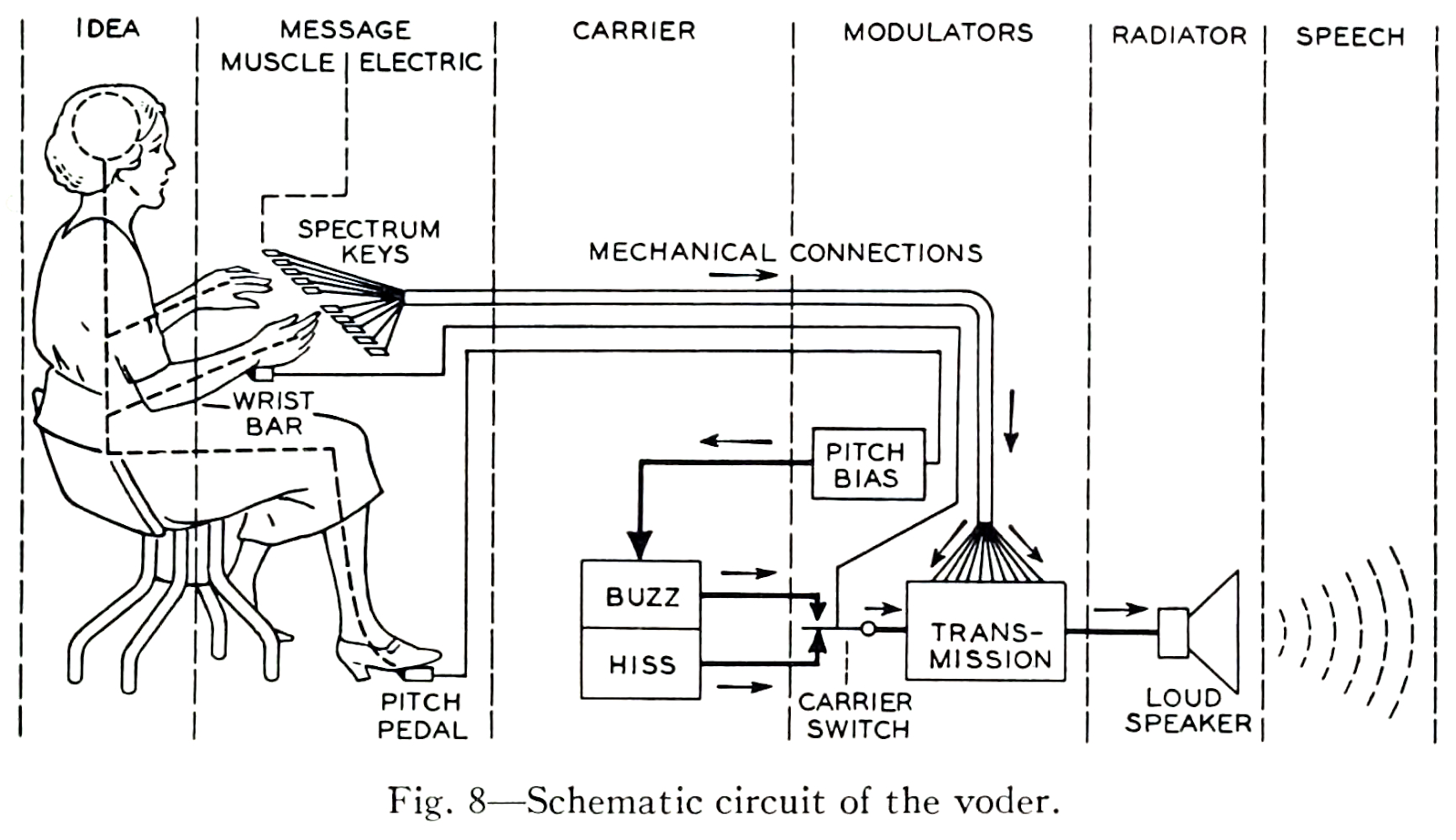

- 1939 – Bell Labs introduced the Voder, the world’s first electronic speech synthesiser. Operated manually using a keyboard and foot pedal, it was far from natural but marked a foundational moment in machine-generated speech (Dudley, Riesz, & Watkins, 1939).

Bell System Technical Journal 1940, p. 509, Fig.8 Schematic circuit of the voder

- 1961 – An IBM 704 mainframe at Bell Labs sang “Daisy Bell,” becoming the first computer to sing a song. This demo later inspired HAL 9000’s eerie farewell in 2001: A Space Odyssey.

- 1968 – Researchers at Japan’s Electrotechnical Laboratory developed the first general-purpose English text-to-speech (TTS) system, helping move the field from novelty to research discipline.

These early systems were rule-based, relying on formant synthesis, which simulates the resonances that the vocal tract uses to shape sound. The output was intelligible, but unmistakably artificial.
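To make the idea concrete, here is a minimal Python sketch of formant synthesis. It is an illustration, not a reconstruction of any historical system. It assumes a 16 kHz sample rate, a simple pulse train standing in for the vocal cords, and rough illustrative formant values for an "ah"-like vowel; all function names are hypothetical.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (an assumption for this sketch)


def pulse_train(pitch_hz, duration_s, sample_rate=SAMPLE_RATE):
    """Crude voice source: one unit impulse per pitch period."""
    period = round(sample_rate / pitch_hz)
    n_samples = round(duration_s * sample_rate)
    return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]


def resonator(signal, freq_hz, bandwidth_hz, sample_rate=SAMPLE_RATE):
    """Second-order digital resonator that emphasises energy near freq_hz."""
    t = 1.0 / sample_rate
    c = -math.exp(-2.0 * math.pi * bandwidth_hz * t)
    b = 2.0 * math.exp(-math.pi * bandwidth_hz * t) * math.cos(2.0 * math.pi * freq_hz * t)
    a = 1.0 - b - c  # normalises the gain at 0 Hz to 1
    out = []
    y1 = y2 = 0.0  # the two previous output samples
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def synthesize_vowel(formants, pitch_hz=120.0, duration_s=0.3):
    """Pass the source through one resonator per (frequency, bandwidth) pair."""
    signal = pulse_train(pitch_hz, duration_s)
    for freq_hz, bandwidth_hz in formants:
        signal = resonator(signal, freq_hz, bandwidth_hz)
    return signal


# Illustrative (frequency, bandwidth) pairs in Hz for an "ah"-like vowel.
AH_FORMANTS = [(730.0, 90.0), (1090.0, 110.0), (2440.0, 170.0)]
samples = synthesize_vowel(AH_FORMANTS)
```

Each resonator emphasises one formant frequency, so chaining three of them shapes the buzz of the pulse train into something vowel-like. Every value is set by hand rather than learned from recordings, which is a large part of why output of this kind sounded artificial.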

1970s - 1990s: From Rules to Recordings

- 1978 – Texas Instruments’ Speak & Spell brought synthetic speech to homes, using a linear predictive coding (LPC) speech chip to help children learn spelling.

- 1984 – DECtalk was released, providing synthetic voices for accessibility and general computing. Stephen Hawking used a closely related synthesiser voice from the mid-1980s for the rest of his life, giving it near-iconic status.


- 1990s – Enter concatenative synthesis, where pre-recorded human speech was sliced into phonemes or syllables and “stitched” together in real time. The results were far smoother and more natural than formant-based systems, at least when the correct units were available.

Concatenative systems brought a leap in clarity but were limited by the size of the audio database. Missed recordings or unusual sentence structures often broke the illusion.
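The stitching step, and the way a gap in the database breaks it, can be sketched in a few lines of Python. The unit labels, the tiny `UNIT_DB` of made-up sample values, and the linear crossfade are all assumptions for illustration; real systems chose among many candidate recordings per unit and smoothed the joins far more carefully.

```python
def crossfade_join(left, right, overlap):
    """Join two sample lists, linearly blending `overlap` samples at the seam."""
    overlap = min(overlap, len(left), len(right))
    if overlap == 0:
        return left + right
    blended = []
    for i in range(overlap):
        weight = (i + 1) / (overlap + 1)  # ramps from favouring left to favouring right
        blended.append((1 - weight) * left[len(left) - overlap + i] + weight * right[i])
    return left[:len(left) - overlap] + blended + right[overlap:]


def concatenate_units(unit_labels, unit_db, overlap=4):
    """Look up each unit's recording and stitch the recordings together in order."""
    missing = [label for label in unit_labels if label not in unit_db]
    if missing:
        # The weak point of the approach: no recording means no speech.
        raise KeyError(f"no recording for units: {missing}")
    output = []
    for label in unit_labels:
        output = crossfade_join(output, unit_db[label], overlap)
    return output


# Hypothetical database: each "recording" is just ten constant samples.
UNIT_DB = {
    "h": [0.1] * 10,
    "e": [0.5] * 10,
    "l": [0.2] * 10,
    "o": [0.4] * 10,
}

speech = concatenate_units(["h", "e", "l", "o"], UNIT_DB)
```

Asking for a unit that was never recorded, such as `concatenate_units(["h", "x"], UNIT_DB)`, raises a `KeyError`, which mirrors how missing recordings broke the illusion.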

2000s: Statistical Models and Early Scalability

- Early 2000s – Hidden Markov Models (HMMs) became popular for statistical parametric synthesis, which generated speech based on probability models rather than audio snippets.

- This allowed for greater flexibility: voices could be modified by adjusting parameters rather than re-recording entire datasets. But they often lacked texture and emotional tone, resulting in flat or “buzzy” speech.

- HMM-based TTS was widely used in early GPS systems, screen readers, and even the first-generation Google Translate voice.

The trade-off was clear: you could scale easily, but the output didn’t quite pass for human.
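A toy Python illustration of the parametric idea follows. It is not an HMM trainer or a working synthesiser: it assumes a model has already learned an average pitch and duration for each state (the `State` fields and the numbers are invented), and it generates only a pitch track, leaving out the spectral parameters and the vocoder a full system would need.

```python
from dataclasses import dataclass


@dataclass
class State:
    label: str
    mean_f0_hz: float  # average pitch assumed to be learned for this state
    mean_frames: int   # average duration, in frames, assumed to be learned


def generate_f0_track(states, pitch_scale=1.0, rate_scale=1.0):
    """Emit each state's average pitch for its (scaled) average duration."""
    track = []
    for state in states:
        n_frames = max(1, round(state.mean_frames * rate_scale))
        track.extend([state.mean_f0_hz * pitch_scale] * n_frames)
    return track


# Hypothetical statistics for a short utterance.
states = [State("a", 120.0, 3), State("b", 100.0, 2)]

default_voice = generate_f0_track(states)
higher_voice = generate_f0_track(states, pitch_scale=1.5)
slower_voice = generate_f0_track(states, rate_scale=2.0)
```

Changing `pitch_scale` or `rate_scale` alters the voice without touching any recordings, which is the flexibility described above. Emitting averaged values for every frame also hints at why the results tended to sound flat.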

2016–2019: Deep Learning Changes Everything

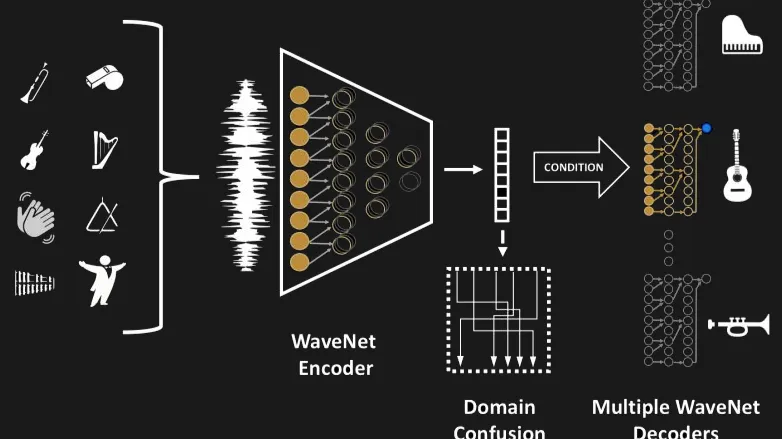

- 2016 – DeepMind unveiled WaveNet, a generative model that produced raw audio waveforms sample by sample. It captured the subtle nuances of speech (intonation, breath, articulation) and significantly raised the bar for voice quality (Van Den Oord et al., 2016).

- 2017 – Google researchers introduced Tacotron, an end-to-end neural TTS model that converted text into spectrograms with uncanny naturalness. Paired with neural vocoders such as WaveNet, as in its successor Tacotron 2, it allowed fully learned speech generation.

- 2018 – Google Duplex debuted: an AI voice assistant that could book appointments over the phone, using “ums” and “mm-hmms” so convincingly that it stunned the audience, while the person on the other end did not appear to notice.

Neural voices did more than read text; they performed it. For the first time, machines could match a human’s rhythm, pitch, and tone. The result was more than a synthetic voice; it had presence.
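The sample-by-sample generation described in the WaveNet entry can be sketched as a simple loop in Python. This is a sketch under heavy assumptions: `toy_predictor` is a hypothetical stand-in for a trained network, and it returns a Gaussian mean and spread, whereas WaveNet predicts a categorical distribution over quantised amplitude values. The `receptive_field` helper shows how stacking dilated convolutions with a kernel size of 2 widens the context each prediction can see.

```python
import random


def receptive_field(dilations, kernel_size=2):
    """Number of past samples visible to a stack of dilated causal convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def toy_predictor(context):
    """Stand-in for a neural network: returns (mean, spread) for the next sample."""
    if not context:
        return 0.0, 0.1
    return 0.9 * context[-1], 0.05


def generate(predict_next, n_samples, context_size, seed=0):
    """Autoregressive loop: each new sample is drawn given the samples before it."""
    rng = random.Random(seed)
    audio = []
    for _ in range(n_samples):
        context = audio[-context_size:]  # only the most recent samples are visible
        mean, spread = predict_next(context)
        audio.append(rng.gauss(mean, spread))
    return audio


# Dilations doubling from 1 to 512, as in one block of dilated layers.
context_size = receptive_field([2 ** i for i in range(10)])
audio = generate(toy_predictor, n_samples=100, context_size=context_size)
```

Because every sample depends on the ones before it, generation of this kind is inherently sequential, which made early autoregressive models slow to run.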

2020s: Cloning, Emotion, and Multilingual Expression

- 2020–2022 – Big Tech deployed neural TTS at scale. Alexa, Siri, Google Assistant, and Microsoft Azure all transitioned to more natural-sounding neural voices.

- 2023 – Microsoft Research introduced VALL-E, a Transformer-based model that could replicate a person’s voice from a three-second sample, preserving not just tone, but emotion and even ambient acoustics.

- Today’s systems go far beyond pronunciation. They can:

  - Replicate emotions (joy, disappointment, curiosity)

  - Imitate celebrity voices and fictional characters

  - Switch languages and accents mid-sentence

  - Reflect intonation based on context

Voice AI has crossed a threshold from intelligent soundboard to full-spectrum conversationalist. And the line between human and synthetic is now more blurred than ever.

Why AI Voices Feel Human Today

For decades, the goal of speech synthesis was simple: make machines intelligible. Today, the goal is far more ambitious: making them indistinguishable from humans. What changed? In short: everything.

Modern voice AI feels human not because it mimics speech, but because it models what lies beneath it. Neural networks do more than string syllables together; they learn how we speak, why we pause, and what emotions live between the lines. The result is less an output than a performance.

We Respond to Emotion, Not Accuracy

Older text-to-speech systems prioritised clarity. Modern voice AI prioritises authenticity. It is less about pronouncing every syllable precisely and more about adding the right pause, injecting warmth, or letting just enough breath into a phrase to make it feel… real.

- A slightly imperfect but emotionally expressive sentence often feels more human than a flawlessly robotic one.

- This shift in design philosophy, from mechanical fluency to emotional believability, is why AI voices today don’t just talk at us. They speak to us.

The science points the same way: research in speech psychology suggests that listeners are more likely to trust and engage with voices that mirror human affect, even if those voices are synthetic.

The Human Voice Is Reimagined

In a world where conversations increasingly start without a human on the other end, voice AI has become a mirror. A mirror of our tone, our rhythm, our emotion. It reflects how we speak, but more importantly, how we want to be heard.

What began as an attempt to make machines intelligible has grown into an industry intent on making them relatable. Today’s voice AI builds trust, evokes empathy, and powers connection at scale.

But as the lines between human and synthetic blur, one truth remains: technology may mimic us, but it’s our responsibility to decide what it speaks for. Whether it's giving someone back the ability to speak, building a more inclusive user experience, or scaling support without losing warmth, voice AI is only as powerful as the intention behind it.

At VerbaFlo, we believe that intention matters. We build voice experiences that are smart, sensitive, secure, and deeply human. Because in every syllable spoken, there’s an opportunity to do more than communicate. There’s a chance to connect.

References:

- Google Duplex. (2018). Google I/O Live Demo: Booking a Hair Appointment with AI. Retrieved from Google I/O

- Variety. (2022). Val Kilmer’s Voice in Top Gun: Maverick Was Created by AI. Retrieved from Variety

- Microsoft Research. (2023). VALL-E: Neural Codec Language Models for Zero-Shot Text-to-Speech Synthesis. Retrieved from Microsoft

- Dudley, H., Riesz, R. R., & Watkins, S. (1939). The Voder (Voice Operation Demonstrator) – Bell Labs

- IBM Archives. (1961). Daisy Bell (Bicycle Built for Two) – The first computer-sung song

- Nagao, M. (1968). English TTS Development in Japan – NICT

- Texas Instruments. (1978). Speak & Spell Product Release

- Computer History Museum. (2014). DECtalk and Stephen Hawking’s Voice

- Hunt, A., & Black, A. (1996). Unit Selection in a Concatenative Speech Synthesis System – ICASSP

- Tokuda, K., Yoshimura, T., Masuko, T., & Zen, H. (2002). HMM-Based Speech Synthesis – IEEE

- Van Den Oord, A. et al. (2016). WaveNet: A Generative Model for Raw Audio – DeepMind

- Wang, Y. et al. (2017). Tacotron: Towards End-to-End Speech Synthesis – Google Research

- Google I/O. (2018). Google Duplex AI Demonstration

- Wang, Y. et al. (2023). VALL-E: Zero-Shot Text-to-Speech with In-Context Learning – Microsoft Research

Ready to hear it for yourself?

Get a personalized demo to learn how VerbaFlo can help you drive measurable business value.
